The house that NVIDIA, TSMC and ASML Built!

The hardware infrastructure stack powering AI and ML models (such as Transformers), and why it is incredibly difficult to replicate

If you are reading this essay, you probably hold different opinions about the companies I have mentioned, or maybe none at all.

You probably associate NVIDIA with gaming, but today it is just as much a machine learning company.

There is a good chance you were unaware of how much TSMC (Taiwan Semiconductor Manufacturing Company) contributes globally (at least before the China chip ban). From iPhones and GPUs to fighter jets, its microchips power most computing devices.

About ASML - well, let's say it's the most sophisticated technology company we've never heard of.

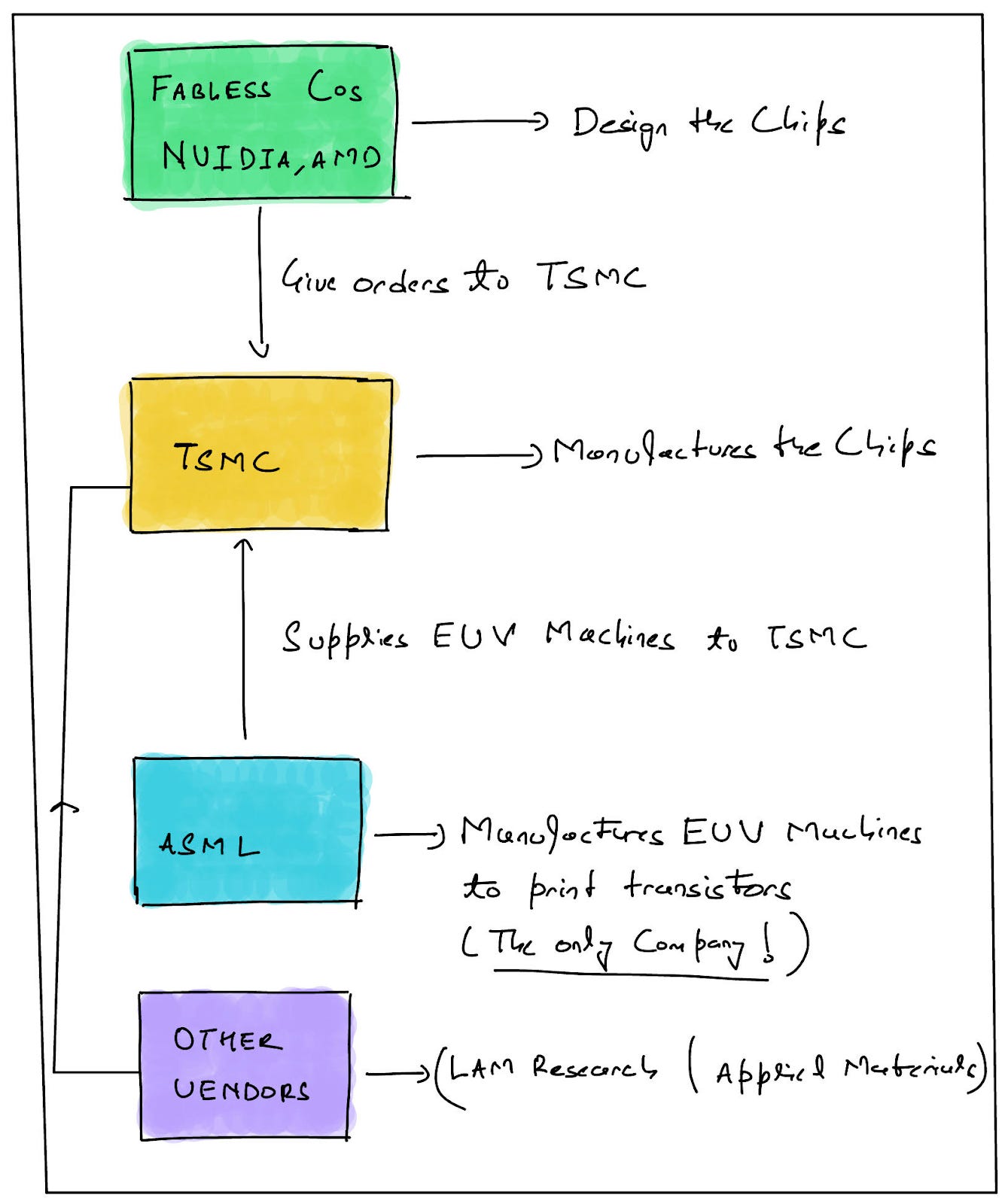

Before we dive deep into the subject, I'd like to spend some time on why we are covering it in the first place. One of the most interesting things to happen in the past few months is a Cambrian explosion of Generative AI applications. This is driven largely by the availability of computing power, which has allowed model sizes to grow by ~15,500x in the last three years. The big breakthrough in deep learning was the invention of Transformer models, a type of model that is very good at processing and understanding natural language, the kind of language people use to communicate with each other.

Now guess the hardware that powers such models: it's the GPUs built by NVIDIA (and obviously a few others). They are the workhorses of the AI computing industry and are usually considered the gold standard. The company that manufactures NVIDIA's GPU chips is Taiwan Semiconductor Manufacturing Company (TSMC). I won't waste your time with another piece of trivia, as you may already be able to guess the next answer: ASML is the only company that builds the EUV machines that power the photolithography steps of chip-making in TSMC's Fabs. Photolithography is arguably the most critical step in making a chip. Below is a good illustration of perhaps the most unique supply chain most of us have never heard of.

NVIDIA - The Machine Learning (and also the gaming) company

There was a huge backlash in the gaming community after NVIDIA’s GTC keynote last year. Part of the frustration came from NVIDIA’s ever-increasing graphics card prices, but an even bigger part came from its strong focus on the Datacenter business, the one that caters to AI and machine learning applications. Here is an interesting stat: Jensen Huang, CEO of NVIDIA, spent precisely 20 minutes of the 97-minute presentation on the gaming division, even though gaming still contributes ~45-50% of NVIDIA's revenue. There is a reason for this sharp tilt: the Datacenter division has grown ~3X in annual revenue over the last three years and is expected to contribute the lion's share as AI/ML applications become more integrated. So how did NVIDIA get here?

NVIDIA came up with the first programmable graphics card and CUDA (NVIDIA’s software platform for programming GPUs), making it incredibly easy for programmers to write embarrassingly parallel software (as all modern AI software is). CUDA maintains forward and backward compatibility, so older chips can still run newer software and vice versa. Let me explain this in simpler terms. Traditionally, the CPU (the workhorse of the computer) fed information to the graphics card, and pixels came out as the product. Under the hood, the GPU was doing a tremendous amount of basic computation, but that computation was hard-wired to specific game libraries. As games and libraries evolved, those graphics cards were rendered obsolete, so NVIDIA created a programmable graphics card. NVIDIA then had to create a programming language and a whole architecture around it, called CUDA (because, obviously, no one had imagined this before). It was probably about six years after CUDA's inception that NVIDIA realised there was another use case for these programmable graphics cards: powering AI and ML infrastructure.

In simplest terms, the CPU is the brain of a computing device: it can execute highly versatile tasks and assign tasks to other components. However, it has a limited number of cores, so it can only do a handful of things at the same time (try running Slack while working on a 1GB Excel file and then come chat with me). A GPU, on the other hand, can only do fairly specific tasks, but a lot of them in parallel. Most CPUs have just a few cores (often 4), and even top-end server CPUs have only dozens, while NVIDIA’s top-of-the-line GTX 1080 Ti GPU has over 3,500 shader cores running at nearly 1.5 GHz, which means it can run over 3,500 simple computations in parallel at clock speeds approaching a CPU's. One of the best examples of an embarrassingly parallel problem is using a GPU to crack encryption. If you want to discover an encryption key, you pick a candidate and check whether it is right. CPUs are really smart and can handle all sorts of logic, but most CPUs in the world have 2 to 4 cores, so they can check only about 4 candidates at a time. A GPU can perform a narrower set of tasks, but a GTX 1080 Ti can check about 3,500 candidates at a time. GPUs are simply better at repetitive tasks, and it just so happens that a lot of machine learning is just like that (and yes, so is Ethereum mining!).
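To make the idea concrete, here is a minimal sketch of an embarrassingly parallel key search, assuming a toy setup: the secret is a small integer whose SHA-256 digest we know, and the names `SECRET_KEY`, `check_range` and `parallel_search` are hypothetical. The key space is cut into independent chunks, one per worker, and no chunk needs anything from another. That independence is exactly what a GPU exploits with thousands of cores instead of four. (Python threads only illustrate the decomposition here; they will not give a real speedup for this CPU-bound work.)

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Optional

# Toy setup (hypothetical): we know only the SHA-256 digest of a small secret key.
SECRET_KEY = 48_213
TARGET = hashlib.sha256(str(SECRET_KEY).encode()).hexdigest()
KEY_SPACE = 100_000  # real keys have astronomically more candidates


def check_range(start: int, stop: int) -> Optional[int]:
    """Try every candidate in [start, stop); each guess is independent."""
    for candidate in range(start, stop):
        if hashlib.sha256(str(candidate).encode()).hexdigest() == TARGET:
            return candidate
    return None


def parallel_search(workers: int) -> Optional[int]:
    """Split the key space into one chunk per worker and search every chunk."""
    chunk = -(-KEY_SPACE // workers)  # ceiling division
    bounds = [(lo, min(lo + chunk, KEY_SPACE)) for lo in range(0, KEY_SPACE, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Chunks never talk to each other: that is what "embarrassingly parallel" means.
        for found in pool.map(lambda b: check_range(*b), bounds):
            if found is not None:
                return found
    return None


print(parallel_search(workers=4))  # 4 workers, like a 4-core CPU
```

On a GPU, the same structure would be expressed as thousands of threads, each checking its own slice; the per-candidate work does not change, only the number of simultaneous guesses.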

Building on this, NVIDIA built the overall infrastructure for GPU computing (and hence AI infrastructure): a higher-level language, APIs, and compilers for data centre operators. The CUDA platform lets programmers program the shaders (the cores of a GPU) directly, so they can tap the parallel processing power of NVIDIA's GPUs without having to learn graphics programming interfaces (such as OpenGL). This matters because machine learning workloads have to be parallelised to run at practical speeds. If you love the subject, I highly recommend reading Ben Thompson’s essay (Link) that he published last year. It walks through the three levels of the NVIDIA stack and how they enable the most computationally intensive workloads, from training trillion-parameter AI models to computational biology.
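The core idea of the CUDA programming model can be sketched without a GPU. You write a kernel that handles one element, launch a grid of thread blocks, and each thread works out which element it owns from its block and thread index (`blockIdx.x * blockDim.x + threadIdx.x` in CUDA C++). The sketch below emulates such a launch sequentially in plain Python; `vector_add_kernel` and `launch` are hypothetical names, and a real GPU would run these threads concurrently.

```python
import math


def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: list, b: list, out: list) -> None:
    """Work done by ONE thread: add a single pair of elements."""
    i = block_idx * block_dim + thread_idx  # CUDA: blockIdx.x * blockDim.x + threadIdx.x
    if i < len(out):  # the last block may have more threads than elements left
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim: int, block_dim: int, *args) -> None:
    """Sequential stand-in for CUDA's kernel<<<grid_dim, block_dim>>>(args...)."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 1000
a = [float(i) for i in range(n)]
b = [2.0 * i for i in range(n)]
out = [0.0] * n

threads_per_block = 256
blocks = math.ceil(n / threads_per_block)  # 4 blocks = 1,024 threads for 1,000 elements
launch(vector_add_kernel, blocks, threads_per_block, a, b, out)
print(out[:3], out[-1])
```

The bounds check matters: 4 blocks of 256 threads launch 1,024 threads for 1,000 elements, and the 24 spare threads must do nothing.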

TSMC - A generation-defining company that transformed the chip-making industry

We have talked a lot about NVIDIA, but let's just say that an NVIDIA-like company exists only because of TSMC. Traditionally, companies such as Intel designed, manufactured, and sold their own chips as part of a full-stack approach. Getting into chip-making was extremely hard because of the huge barriers to entry created by enormous capital requirements. TSMC unbundled the process and became a “Chip Manufacturer as a Service” for fabless companies such as NVIDIA, AMD, Apple and others. These companies design their own chips and work with foundries such as TSMC to manufacture them at scale. (Fun fact: to date, NVIDIA has raised only US$20M in private funding.) Last month, Apple's CEO Tim Cook and US President Biden inaugurated one of TSMC’s newest Fabs in Arizona, where the company plans to invest over US$40B in the coming years. (A Fab is the actual building where semiconductors are made; foundries are the companies that sell that building’s production as a service.) Additionally, you may be aware that the Biden administration enacted sweeping export controls to curb China's ability to manufacture leading-edge nodes (i.e. the most advanced chips). Companies such as TSMC, ASML and Lam Research Corp were directly or indirectly affected by these restrictions, which required them to halt shipments related to advanced chips. So how did we get here?

Manufacturing Chips and Replicating the Semiconductor Supply Chain is incredibly hard

It's probably one of the few industries where suppliers command huge power because of the unique capabilities required to manufacture chips. This is coupled with the mind-boggling capital expenditure required to build the infrastructure for advanced nodes (the most advanced manufacturing processes). But you can’t simply buy your way into making chips. Below is a chart that lists the number of companies that can produce lagging-edge nodes (for example, chips that go into your vehicle, typically >60nm) versus leading-edge nodes (chips that go into your Apple MacBook or NVIDIA’s GPUs). The smaller the feature size, the more transistors can be packed into a chip, making it much more powerful. For example, a 5nm chip is much more powerful than a 28nm chip (node names such as 5nm are now largely marketing labels rather than a literal gate length, but a smaller node still means a denser chip). The number of players with leading-edge manufacturing capabilities keeps shrinking because of the complexity of building high-quality semiconductors. Fun fact: TSMC is the only company that can produce 5nm chips at high yields (more on this later). TSMC will also most likely be the only company able to build 3nm chips at scale in the coming years (followed, perhaps, by Intel, which is typically 3-5 years behind). Let's take a closer look at some of the reasons for this phenomenon.
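A rough way to see why smaller features mean more transistors: if every linear dimension shrinks by a factor s, the area each transistor occupies shrinks by s², so density rises by s². The sketch below applies that idealized rule to the 28nm vs 5nm example. Treat the result as an idealized figure under that assumption only; because node names are marketing labels, real density gains between those nodes are smaller. `ideal_density_gain` is a hypothetical helper.

```python
def ideal_density_gain(old_node_nm: float, new_node_nm: float) -> float:
    """Idealized density gain, assuming all features shrink by old/new.

    Transistor area scales with the square of its linear dimensions,
    so density scales with (old / new) ** 2.
    """
    return (old_node_nm / new_node_nm) ** 2


print(f"{ideal_density_gain(28, 5):.1f}x")  # idealized only; real nodes differ
```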

It's Freakishly Expensive

Fabs are incredibly expensive to build (north of ~US$12B in Capex for 20K wafers per month). Venture capital was first used to fund chip companies, but it has since moved almost entirely to the software industry. As a rule of thumb, a Fab depreciates over five to ten years, after which the cost of producing a chip drops to its marginal cost. For Fabs to be economically viable, they need to be operated for as long as possible. For example, TSMC's Fab 2 (a 150mm-wafer facility) was built in 1990 and is still up and running; it’s one of 6 of the 26 facilities that are over 20 years old. Chips from such an old Fab don't sell for a high price, but most of the revenue is profit, since the Fab is fully depreciated and the marginal cost of producing a chip is low. ASML's annual report shows the Capex that chip manufacturers (such as TSMC) will incur on upcoming Fab facilities (and boy, is it expensive!). The US$12B price tag on the Arizona facility covers only the first phase, which will produce 20K wafers a month. As the project progresses, more capacity will be added.
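The depreciation logic above can be sketched as a straight-line model: the Capex is spread evenly over the depreciation period and then drops out, leaving only the marginal (variable) cost per wafer. The US$12B Capex and 20K wafers per month come from the text; the US$3,000 variable cost per wafer is a hypothetical placeholder, and `cost_per_wafer` is an illustrative helper, not an industry formula.

```python
def cost_per_wafer(capex_usd: float, wafers_per_month: int, depreciation_years: int,
                   variable_cost_usd: float, year: int) -> float:
    """Straight-line depreciation: Capex is charged evenly across the wafers made
    during the first `depreciation_years` years (`year` is 1-based); afterwards
    only the variable cost per wafer remains."""
    wafers_per_year = wafers_per_month * 12
    if year <= depreciation_years:
        depreciation_per_wafer = capex_usd / depreciation_years / wafers_per_year
    else:
        depreciation_per_wafer = 0.0  # the Fab is fully depreciated
    return depreciation_per_wafer + variable_cost_usd


CAPEX = 12e9            # US$12B, from the text
WAFERS_PER_MONTH = 20_000
VARIABLE_COST = 3_000   # hypothetical marginal cost per wafer (US$)

for year in (1, 5, 6, 30):
    cost = cost_per_wafer(CAPEX, WAFERS_PER_MONTH, 5, VARIABLE_COST, year)
    print(f"year {year:>2}: ${cost:,.0f} per wafer")
```

With a 5-year schedule, each wafer carries US$10,000 of depreciation in years 1-5 and none afterwards, which is why a decades-old Fab can still be profitable selling cheap chips.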

The Capex can be divided into four broad categories: the construction of the building itself, including the clean room (where the actual manufacturing happens); the purchase of equipment, which is super expensive; utility costs; and the labour costs of building the whole facility (along with the utilities). Let’s try to estimate this Capex profile in a bit more detail.

Equipment Costs: Photolithography equipment accounts for a large share of equipment costs. EUV machines print the transistor patterns onto the wafers, and only one company builds them (ASML). Each machine can cost up to US$300M, and a Fab may need around 15-20 of them. The required production cycle time determines the number of machines: the more machines there are, the shorter the cycle time. Because TSMC serves the world's largest fabless chip companies (such as NVIDIA and others), it needs short cycle times.

Deep Ultraviolet (DUV) machines: US$100M to US$150M apiece

Extreme Ultraviolet (EUV) machines: US$200M to US$300M apiece, depending on the node
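Why do more machines shorten cycle time? One standard way to reason about it, swapped in here as an illustration rather than anything TSMC is known to use, is an M/M/c queue: wafer lots arrive at random, each of c identical tools processes them at a fixed average rate, and the Erlang C formula gives the expected waiting time. The arrival and processing rates below are hypothetical; only the trend matters. As c grows, queueing time falls quickly, and with too few tools the queue grows without bound.

```python
import math


def mean_time_in_system(arrival_rate: float, service_rate: float, machines: int) -> float:
    """Average hours a job spends waiting plus being processed in an M/M/c queue.

    arrival_rate: jobs arriving per hour (Poisson arrivals)
    service_rate: jobs one machine completes per hour (exponential service)
    machines:     number of identical machines, c
    """
    load = arrival_rate / service_rate  # offered load, in "busy machines"
    if load >= machines:
        return math.inf  # demand exceeds capacity: the queue grows without bound
    # Erlang C: probability that an arriving job has to wait for a free machine.
    tail = (load ** machines / math.factorial(machines)) * (machines / (machines - load))
    head = sum(load ** k / math.factorial(k) for k in range(machines))
    p_wait = tail / (head + tail)
    mean_queue_time = p_wait / (machines * service_rate - arrival_rate)
    return mean_queue_time + 1 / service_rate


# Hypothetical numbers: 9 wafer lots per hour arrive at the lithography step,
# and each tool finishes 2 lots per hour on average.
for c in range(4, 9):
    hours = mean_time_in_system(9, 2, c)
    print(f"{c} tools: {hours:.2f} h per lot")
```

With 4 tools the step cannot keep up at all (load 4.5 > 4); each tool added beyond that shrinks the time a lot spends waiting, which is the cycle-time effect described above.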

Labour Costs: Constructing a Fab of this capacity typically takes 4-5 years, plus roughly two more years of work to set up the tools, plan utilities, partition clean rooms, etc.

Building Costs: This covers the construction of the clean rooms, where semiconductors are actually manufactured, and typically costs ~US$1B to US$1.5B. A Fab also needs ~2-4M gallons of water per day to produce wafers, so it requires a large recycling facility to reuse the water and a plant that converts raw water into ultra-pure water for the process to work. Setting up these water facilities can cost more than US$800M on top of the construction expenses.

Utility Costs: This covers keeping the clean room within its classification (no dust, tightly controlled temperature) and meeting special requirements while the machines are installed, plus all the other resources consumed while construction is under way.
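Adding up only the line items quoted above gives a rough sense of scale. The sketch below sums them in US$ millions; it deliberately leaves out DUV scanners, all non-lithography tools, utilities and labour, so it is a partial tally under those assumptions, not an estimate of total Fab cost.

```python
# (low, high) ranges in US$ millions, taken from the figures quoted above.
LINE_ITEMS = {
    "EUV machines (15-20 at US$200M-300M each)": (15 * 200, 20 * 300),
    "Clean rooms": (1_000, 1_500),
    "Water recycling and ultra-pure water plant (quoted as 'more than')": (800, 800),
}

low = sum(lo for lo, _ in LINE_ITEMS.values())
high = sum(hi for _, hi in LINE_ITEMS.values())

for item, (lo, hi) in LINE_ITEMS.items():
    print(f"{item}: ${lo:,}M to ${hi:,}M")
print(f"Partial total: ${low / 1000:.1f}B to ${high / 1000:.1f}B")
```

These few items alone come to roughly US$4.8B to US$8.3B before any other equipment, utilities or labour, which helps explain how the first phase in Arizona reaches ~US$12B.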

One may argue that you can simply buy your way into building this infrastructure, but it’s nearly impossible (especially for advanced nodes) to replicate the supply chain needed to produce chips at scale.

A Supply Chain That Is Almost Impossible to Replicate

It’s probably one of the very few industries with far fewer suppliers than customers (and these customers include the likes of Apple, NVIDIA and others). Manufacturing advanced nodes is a long learning process, and it's one of those industries where customers invest in their suppliers. Just as ASML (the company that manufactures the machines installed in Fabs) required heavy co-investment from its customers (TSMC, Intel, Samsung), Fabs require co-investment and capacity risk-sharing from their customers. Because the business is so expensive, the semiconductor industry has moved towards the NCNR (Non-Cancellable, Non-Refundable) model. This kind of environment cannot be created just by spending tons of money. For example, ASML and TSMC have to work together constantly to keep chip production running smoothly. When a node is launched, it goes through a risk production phase, which typically lasts 3-4 years, during which yields start out extremely poor and are improved continuously; by most estimates, early yields hover around ~25% to 30%. Customers (such as NVIDIA, AMD and others) typically place small prototype orders with TSMC, the processes (and steps) are optimised, and equipment is modified (by suppliers such as ASML) to push yields up to at least 80%. Interestingly, customers are also expected to help foundries like TSMC through the risk production phase. TSMC earns revenue from prototyping (paid for by its customers), but less than from HVM (High Volume Manufacturing), because fewer wafers are ordered. The whole process requires considerable collaboration between TSMC and its vendors (such as ASML), which makes this supply chain incredibly difficult to replicate, even for countries like China that can make huge Capex commitments.
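
The yield numbers above translate directly into cost. A minimal sketch, assuming a hypothetical wafer cost of US$17,000 and 600 candidate dies per wafer (neither figure comes from the text): a wafer costs the same whether its chips work or not, so the cost of each good chip is the wafer cost divided by the number of working dies. `cost_per_good_die` is a hypothetical helper.

```python
def cost_per_good_die(wafer_cost_usd: float, dies_per_wafer: int, yield_rate: float) -> float:
    """Wafer cost spread over only the dies that actually work."""
    good_dies = dies_per_wafer * yield_rate
    return wafer_cost_usd / good_dies


WAFER_COST = 17_000   # hypothetical, US$
DIES_PER_WAFER = 600  # hypothetical

for label, y in (("risk production", 0.25), ("mature HVM", 0.80)):
    cost = cost_per_good_die(WAFER_COST, DIES_PER_WAFER, y)
    print(f"{label} ({y:.0%} yield): ${cost:,.2f} per chip")
```

Whatever the wafer cost, going from 25% to 80% yield makes each working chip 3.2x cheaper (0.80 / 0.25), which is why foundries, customers and suppliers invest so much in the risk production phase.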

The value chain for leading-edge nodes is characterized by incredible supplier concentration. Below is a flow chart that depicts the broad value chain.

Several parts of the value chain are served by only one supplier across the globe because of the sheer complexity of the technology. For example, ASML is the only company that builds the EUV (Extreme Ultraviolet) machines required to print transistors at an advanced node. To give you a more detailed view, here is a simplified map of suppliers for each part of the value chain.

Software used to design the chips

Two companies, Cadence and Synopsys, dominate the market for the software used to design chips, and fabless companies rely on their tools to design their chips. Both companies are based in California, so your supply chain always depends on U.S. goodwill.

Photolithography Process powered by ASML (The most innovative company you have never heard of)

There is only one company in the world that manufactures extreme ultraviolet (EUV) lithography equipment. It's called Advanced Semiconductor Materials Lithography (ASML). Photolithography is the process of using light to print transistor patterns onto silicon wafers. However, there is one fundamental problem: the deep ultraviolet (DUV) light used by conventional lithography machines has a wavelength of 193nm, so how does one print transistors with features only a few nanometres across? ASML's EUV machines do it with light of just 13.5nm wavelength. The technology is so complex that it only became commercially viable about 30 years after it was first conceptualized (in 1986). To put things into perspective, an EUV machine is made up of more than 100K parts, and it takes 4 Boeing 747s to transport one machine. ASML's crews work in a deeply integrated way with the foundries to install these machines and make them work. Below is the lithography process as described by the Brookings Institution.

“An extreme ultraviolet lithography machine is a technological marvel. A generator ejects 50,000 tiny droplets of molten tin per second. A high-powered laser blasts each droplet twice. The first shapes the tiny tin, so the second can vaporize it into plasma. The plasma emits extreme ultraviolet (EUV) radiation that is focused into a beam and bounced through a series of mirrors. The mirrors are so smooth that if expanded to the size of Germany they would not have a bump higher than a millimeter. Finally, the EUV beam hits a silicon wafer—itself a marvel of materials science—with a precision equivalent to shooting an arrow from Earth to hit an apple placed on the moon. This allows the EUV machine to draw transistors into the wafer with features measuring only five nanometers—approximately the length your fingernail grows in five seconds. This wafer with billions or trillions of transistors is eventually made into computer chips.

EUV lithography technology has been in development since the 1980s but entered mass production only in the last two years. Other companies make older generations of lithography machines that don’t use EUV and can only make older generations of less cost-effective chips. These companies include venerable firms such as Nikon and Canon. They have the experience, expertise, and market discipline that comes from decades of profitability in a competitive industry under extreme technological demands. If these companies could make EUV machines, they would—it would make them billions of dollars. This is also why, after more than 30 years of development and billions of dollars in R&D, ASML still faces such a backlog of orders: They are hard to make. EUV machines are at the frontier of human technological capabilities.”
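Why does the wavelength matter so much? A standard rule of thumb in lithography, the Rayleigh criterion, says the smallest printable feature (critical dimension) is roughly CD = k1 × λ / NA, where λ is the wavelength, NA is the numerical aperture of the optics, and k1 is a process-dependent factor with a practical floor of about 0.25. The sketch below plugs in 193nm immersion DUV (NA 1.35) and 13.5nm EUV (NA 0.33, and 0.55 for ASML's newer High-NA systems). The k1 = 0.3 used for all three is an assumption for illustration, and `rayleigh_cd_nm` is a hypothetical helper.

```python
def rayleigh_cd_nm(wavelength_nm: float, numerical_aperture: float, k1: float = 0.3) -> float:
    """Approximate smallest printable feature (critical dimension) in nm.

    Rayleigh criterion: CD = k1 * wavelength / NA. k1 = 0.3 is an assumed value.
    """
    return k1 * wavelength_nm / numerical_aperture


SYSTEMS = {
    "DUV immersion (193nm, NA 1.35)": (193.0, 1.35),
    "EUV (13.5nm, NA 0.33)": (13.5, 0.33),
    "High-NA EUV (13.5nm, NA 0.55)": (13.5, 0.55),
}

for name, (wavelength, na) in SYSTEMS.items():
    print(f"{name}: ~{rayleigh_cd_nm(wavelength, na):.1f}nm per single exposure")
```

In this model, even EUV prints single-exposure features of roughly 12nm, another reminder that a "5nm" node name does not describe any single feature on the wafer.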

Such is the complexity of the technology that only one company in the world produces the mirrors that reflect the EUV light inside these machines: Zeiss, based in Germany.

A country like China (which is well able to commit insane amounts of capital) would need to create a whole ecosystem of chip manufacturing processes, including really high-quality, hyper-specialised talent. This ecosystem would have to include its own equivalents of TSMC, ASML, Lam Research, Applied Materials, and Tokyo Electron. It may even have to go a level deeper and create an ecosystem of companies such as Zeiss.
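One way to picture this concentration is to treat the value chain as a simple map from each stage to its suppliers and flag the stages that depend on a single company. The stages and names below are only those mentioned in this essay (with Applied Materials, Lam Research and Tokyo Electron grouped under deposition and etch equipment, their best-known role), so this is an illustrative sketch, not a complete market map; `VALUE_CHAIN` and `chokepoints` are hypothetical names.

```python
from typing import Dict, List

# Stage -> suppliers, using only companies named in this essay (illustrative).
VALUE_CHAIN: Dict[str, List[str]] = {
    "Chip design software": ["Cadence", "Synopsys"],
    "Leading-edge (5nm) manufacturing at high yield": ["TSMC"],
    "EUV lithography machines": ["ASML"],
    "EUV mirrors": ["Zeiss"],
    "Deposition and etch equipment": ["Applied Materials", "Lam Research", "Tokyo Electron"],
}


def chokepoints(chain: Dict[str, List[str]], max_suppliers: int = 1) -> List[str]:
    """Return the stages served by at most `max_suppliers` companies."""
    return [stage for stage, suppliers in chain.items() if len(suppliers) <= max_suppliers]


print(chokepoints(VALUE_CHAIN))     # single-supplier stages
print(chokepoints(VALUE_CHAIN, 2))  # stages with one or two suppliers
```

In this framing, a country trying to localise the stack would have to build a replacement for every entry in the first list before it could stop depending on others.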

So what’s the future?

The proliferation of AI across civilian and defence use cases means there will likely be a huge expansion at the compute layer. Most of the big tech companies are pursuing a vertical approach, trying to create their own end-to-end AI infrastructure (like the one created by NVIDIA, powered by TSMC and ASML).

Google, too, has invested in hardware: the TPU (Tensor Processing Unit) was designed specifically to work with Google's TensorFlow framework. TPUs are application-specific integrated circuits (ASICs) that accelerate machine learning workloads and power AI services on Google Cloud, and they are Google's effort to capture the end-to-end AI stack. To make sure Google can capitalise on this technology, Cloud TPUs can only be rented through Google Cloud.

AWS is also building AI infrastructure. Although it is further behind in the race, Amazon acquired Annapurna Labs, an Israeli semiconductor company, to build its own hardware and provide an end-to-end AI stack in conjunction with the AWS platform.

Countries are pushing for semiconductor sovereignty and trying to replicate the supply chain we talked about earlier. While I believe there is potential to localise this supply chain for lagging-edge nodes (used in vehicles and other equipment), we are still much further from localising it for leading-edge nodes, which are necessary for powering AI/ML applications. Below is a chart showing capital commitments by different countries.

The jury is still out on whether this hardware infrastructure stack can get replicated or not. Nevertheless, we can expect significant government investments to localize this hardware stack, and who knows, perhaps new companies will emerge in these environments.

Please feel free to reach out to me in case you want to debate some of these ideas!